Emily Lakdawalla • May 13, 2014

A new Earthrise over the Moon from Lunar Reconnaissance Orbiter's pushframe camera

Earth's brilliant colors shine above the drab lunar horizon in this new "Earthrise" photo from the Lunar Reconnaissance Orbiter:

When the Lunar Reconnaissance Orbiter Camera (LROC) team posted this photo, they also shared a slightly different version of it. The animation below contains 149 frames, and is the full data set acquired by the wide-angle camera for this observation. It's a little difficult to understand, though. What are the black bars crossing the image? Why is it so skinny top-to-bottom? How did this grayscale animation get turned into the color image at the top of the page? To answer these questions, I'll have to explain a bit about how the LROC Wide-Angle Camera works.

To begin with: the LROC WAC is a pushframe imager. What does that mean? It's a slightly unusual design, falling in between the two most common basic designs for space cameras. Some space cameras are framing cameras: you snap a single photo and it gets captured on a rectangular detector, a snapshot of a moment in time. Cassini's cameras are like that, as are the cameras on the various Mars rovers. Other space cameras are "pushbrooms": the detector is a single line of pixels, and it sweeps across the planet as the spacecraft moves in its orbit, building up long, skinny image swaths over time. Mars-orbiting cameras like HiRISE, MOC, and HRSC are all pushbroom cameras, as is the narrow-angle camera on Lunar Reconnaissance Orbiter.

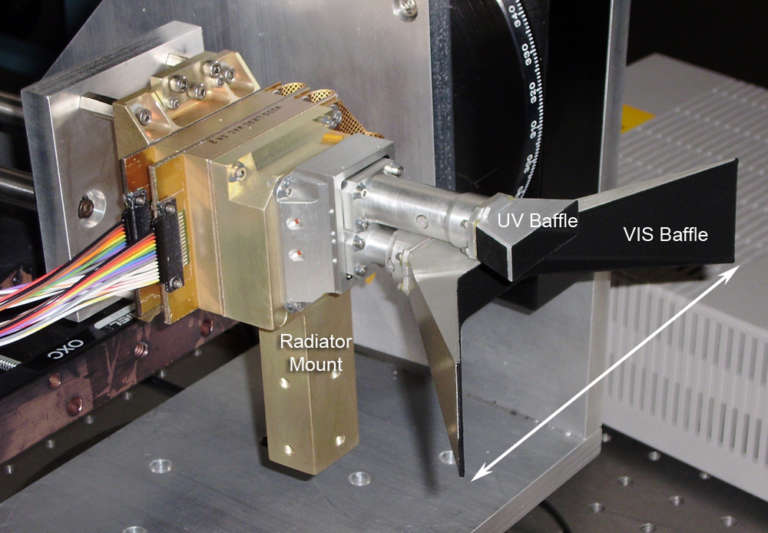

A pushframe camera is a hybrid of these two. LROC WAC has a rectangular detector, like a framing camera. That detector has seven different color filters glued to it. I couldn't find a diagram of the LROC WAC focal plane, but LROC WAC is a direct descendant of the MARCI camera on Mars Reconnaissance Orbiter, so here's how MARCI's detector is organized. It's a CCD detector, like most framing cameras, but rectangular filters cover different parts of the CCD. The wavelengths of those filters on MARCI are similar but not quite identical to the ones on LROC WAC.

LROC WAC has two different sets of optics, one optimized for visible wavelengths and one for ultraviolet. Five of the filters on the LROC WAC focal plane are visible, and two are ultraviolet. Ultraviolet data have roughly one-fifth the spatial resolution of visible data.

Light passing through the optics is focused on the detector, and LROC WAC takes a snapshot. But each frame actually consists of seven distinct framelets, one for each filter. Each framelet is a skinny rectangle, 1024 pixels wide and, for most wavelengths, 14 pixels tall. As the spacecraft moves in its orbit, the WAC repeatedly snaps frames, timed so that each color band's framelet slightly overlaps the same band's framelet from the previous snapshot. That is what makes it like a pushbroom camera. Because it's a hybrid of a framing camera and a pushbroom camera, it's called a pushframe camera.
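To make the timing idea concrete, here is a minimal sketch of the arithmetic for a simplified, nadir-pointing case. It assumes a ground pixel scale of 75 meters, a 2-row overlap between successive framelets, and a ground speed equal to the roughly 1,600 meters per second orbital speed that Mark Robinson mentions later in this post. All three numbers are illustrative assumptions rather than LROC operating parameters, and the function name `frame_interval_s` is hypothetical.

```python
def frame_interval_s(framelet_rows: int, overlap_rows: int,
                     pixel_scale_m: float, ground_speed_m_s: float) -> float:
    """Seconds between snapshots so that consecutive framelets of one band
    overlap by `overlap_rows` rows (toy nadir-pointing model)."""
    if not 0 <= overlap_rows < framelet_rows:
        raise ValueError("overlap must be smaller than the framelet height")
    # Each new frame should advance the ground track by the non-overlapping rows.
    advance_m = (framelet_rows - overlap_rows) * pixel_scale_m
    return advance_m / ground_speed_m_s


# Hypothetical inputs: 14-row framelets, 2 rows of overlap,
# an assumed 75 m ground pixel, and ~1600 m/s (orbital, not ground-track, speed).
print(round(frame_interval_s(14, 2, 75.0, 1600.0), 4))  # 0.5625
```

With those assumed inputs, each snapshot advances the ground track by 12 rows × 75 m = 900 m, so a new frame is due about every 0.56 seconds. A real calculation would use the slightly slower ground-track speed and the actual pixel scale at the spacecraft's altitude.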

LROC WAC is operated in two different modes, monochrome and color. In monochrome mode, the team uses only the 605-nanometer framelet. Here is an example of raw monochrome image data, selected at random from the data set. Each of the strips is a 1024-by-14-pixel framelet. Turning this into a more familiar-looking photo requires cutting the strips apart and mosaicking them into a long image swath.
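The cutting-and-mosaicking step for monochrome data can be sketched as follows. This is a toy version that assumes every framelet overlaps the previous one by a fixed, known number of rows, and that the overlapping rows sit at the top of each new framelet; real processing also has to account for viewing geometry and spacecraft pointing. The name `mosaic_framelets` is hypothetical.

```python
from typing import List

Framelet = List[List[int]]  # rows of pixel values, top row first


def mosaic_framelets(framelets: List[Framelet], overlap_rows: int) -> Framelet:
    """Stack framelets into one long swath, dropping the rows each framelet
    shares with the one before it (assumes a fixed overlap at the top)."""
    swath: Framelet = []
    for i, framelet in enumerate(framelets):
        # Keep the first framelet whole; later ones skip their overlapping rows.
        start = 0 if i == 0 else overlap_rows
        swath.extend(row[:] for row in framelet[start:])
    return swath


# Three synthetic 14-row, 4-column framelets; pixel value encodes frame and row.
frames = [[[f * 100 + r] * 4 for r in range(14)] for f in range(3)]
swath = mosaic_framelets(frames, overlap_rows=2)
print(len(swath))  # 38 rows: 14 + 12 + 12
```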

In color mode, they take data from all seven filters. Below is an example of raw color image data. You can see how there are five color strips for the visible wavelengths, and two much smaller strips for the ultraviolet wavelengths. Making color images involves cutting and mosaicking the many strips into seven different image swaths, one for each wavelength, and then registering them together.
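For color mode, the extra step is cutting each raw frame into its seven band framelets before mosaicking each band separately. The sketch below uses a hypothetical band layout: the row offsets, heights, overlaps, and the names `uv_1` and `uv_2` are invented for illustration, since the real LROC WAC focal-plane layout isn't given here. It also stops before registration, which would shift the seven swaths relative to one another so that the same ground feature lines up in every band.

```python
from typing import Dict, List, Tuple

Rows = List[List[int]]

# Hypothetical layout: band -> (first row, row count, overlap rows) within one
# raw color frame. These numbers are illustrative, NOT real LROC WAC values.
BAND_LAYOUT: Dict[str, Tuple[int, int, int]] = {
    "415nm": (0, 14, 2), "565nm": (14, 14, 2), "605nm": (28, 14, 2),
    "645nm": (42, 14, 2), "695nm": (56, 14, 2),
    "uv_1": (70, 4, 1), "uv_2": (74, 4, 1),
}


def split_frames(frames: List[Rows]) -> Dict[str, Rows]:
    """Cut every raw frame into band framelets and build one swath per band
    (no registration between bands is attempted here)."""
    swaths: Dict[str, Rows] = {band: [] for band in BAND_LAYOUT}
    for i, frame in enumerate(frames):
        for band, (first, count, overlap) in BAND_LAYOUT.items():
            framelet = frame[first:first + count]
            # Skip rows already covered by this band's previous framelet.
            skip = 0 if i == 0 else overlap
            swaths[band].extend(row[:] for row in framelet[skip:])
    return swaths


# Two synthetic 78-row frames; pixel value encodes frame and row.
raw = [[[f * 1000 + r] * 2 for r in range(78)] for f in range(2)]
swaths = split_frames(raw)
print(len(swaths["565nm"]), len(swaths["uv_1"]))  # 26 7
```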

One thing that surprised me when I checked out the color images is that they are only 704 pixels wide, rather than the full 1024-pixel width of the detector. I asked LROC principal investigator Mark Robinson why color images were narrower than monochrome ones, and he told me: "Because we can't read out the full CCD fast enough due to the fact that LRO is moving in its orbit about 1600 m per second! If we did read out all 1024 pixels in all bands we would end up with little gaps between the framelets." Reading out fewer pixels lets the camera finish each readout in time to snap the next image as the spacecraft travels rapidly around the Moon.
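Robinson's point is about time: each readout has to finish before the next snapshot is due. A toy model, assuming readout time grows linearly with the number of pixels read, shows why trimming columns helps. The one-microsecond-per-pixel rate, the 78-row frame height, the 0.08-second interval, and the function names are all hypothetical placeholders, not LROC specifications.

```python
def readout_time_s(columns: int, rows: int, seconds_per_pixel: float) -> float:
    """Time to read a columns x rows window, assuming cost is linear in pixels."""
    return columns * rows * seconds_per_pixel


def max_columns(frame_interval_s: float, rows: int, seconds_per_pixel: float) -> int:
    """Widest window (in columns) that can be read before the next snapshot."""
    return int(frame_interval_s // (rows * seconds_per_pixel))


# Hypothetical numbers for illustration only.
full = readout_time_s(1024, 78, 1e-6)
narrow = readout_time_s(704, 78, 1e-6)
print(round(narrow / full, 4))         # 0.6875
print(max_columns(0.08, 78, 1e-6))     # 1025
```

Under the linear assumption, reading 704 of 1024 columns takes 68.75% of the full-width time, whatever the actual per-pixel rate is. If the real interval between frames is shorter than the full-width readout time, something has to give, and narrowing the window is one way to make it fit.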

Here, I've taken the Earthrise animation above and colored on top of it to show you how each framelet is an image captured through a different-color filter. The filters are blue (415 nanometers), green (565 nanometers) and then three different red wavelengths (605, 645, and 695 nanometers). Earth shows up first in the 605-nanometer red framelet, and then over the time of the observation it crosses into the green and blue framelets. The Moon only appears in the red framelets.

So the Moon showed up in only red-filter data -- the Moon part of the Earthrise image is not in color. Indeed, the LROC team says so in their post about the Earthrise image: "the Moon is a greyscale composite of the first six frames of the WAC observation (while the spacecraft was still actively slewing), using visible bands 604 nm, 643 nm, and 689 nm." Then Earth showed up sequentially in red, green, and blue filters -- just as any feature on the lunar surface does in the course of LROC WAC's ordinary operation. The LROC team made the still, color image of Earth by compositing parts of red, green, and blue frames captured over the course of the WAC observation.
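The final compositing step can be sketched as assigning three grayscale bands to the red, green, and blue channels of a color image. This sketch assumes the bands have already been cut, mosaicked, and registered onto the same pixel grid, and it applies a simple per-band min/max stretch. The LROC team's post doesn't specify its bands or stretch beyond "red, green, and blue frames," so the choice of 605, 565, and 415 nanometers in the usage line, and the function names, are illustrative assumptions.

```python
from typing import List, Tuple

Gray = List[List[float]]
RGB = List[List[Tuple[int, int, int]]]


def stretch(band: Gray) -> List[List[int]]:
    """Linearly rescale one band to 0-255 (band minimum -> 0, maximum -> 255)."""
    lo = min(min(row) for row in band)
    hi = max(max(row) for row in band)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat band
    return [[round(255 * (v - lo) / span) for v in row] for row in band]


def composite(red: Gray, green: Gray, blue: Gray) -> RGB:
    """Combine three registered, same-sized bands into (R, G, B) pixels.
    Stretching each band independently does not preserve true color balance."""
    r, g, b = stretch(red), stretch(green), stretch(blue)
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]


# Tiny 1x2 example: assumed 605 nm -> red, 565 nm -> green, 415 nm -> blue.
print(composite([[0.0, 10.0]], [[5.0, 5.0]], [[2.0, 4.0]]))
# [[(0, 0, 0), (255, 0, 255)]]
```

A per-band stretch is the simplest choice and makes each channel use its full range; a color-faithful composite would instead scale all three bands with a common calibration.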

It gives me a greater sense of awe to understand the tricky technical feats required to capture the beautiful images returned from our space robots!
