Raymond Francis & Tara Estlin • Mar 13, 2018

Automating Science on Mars

NASA has sent a robot to Mars that autonomously picks out targets to zap with its laser, blasting a little bit of rock to plasma. It's for science!

Since 2016, NASA’s Curiosity Mars rover has had the ability to choose its own science targets using an onboard intelligent targeting system called AEGIS (for Automated Exploration for Gathering Increased Science). The AEGIS software can analyze images from onboard cameras, identify geological features of interest, prioritize and select among them, then immediately point the ChemCam instrument at selected targets to make scientific measurements.

Autonomous targeted science without Earth in the loop is a new way to operate a scientific mission. It has become a routine part of the MSL Science Team’s strategy for exploring the ancient sedimentary rocks of Gale Crater.

AEGIS is an example of what we call ‘science autonomy’, where the spacecraft (the rover in this case) can make certain decisions on its own about scientific measurements and data – choosing which measurements to make, or having made them, which to transmit to Earth. This is distinct from autonomy in navigation, or in managing onboard systems – both of which Curiosity can also do. In a solar system that’s tens to hundreds of light-minutes across, science autonomy allows us to make measurements that can’t be made with humans in the loop, or to make use of periods where our robotic explorers would be waiting for instructions from Earth.

Why

A typical sol’s activity plan for the rover often includes a period of targeted science observations, followed by a drive to a new location, and post-drive science activities. The pre-drive targeted observations involve the science team (on Earth) deciding where to point science instruments at surface features. To make those choices, the team has to see those features in images that have already been downlinked from the rover to Earth. This isn’t possible for post-drive science: images from the rover’s location after the end of a drive won’t be available on Earth until shortly before the next day’s operations planning begins. So post-drive science activities are limited to observations that don’t need fine targeting: wide-field panoramas, images of the sun or the sky for atmospheric studies, analyses with the onboard geochemical lab instruments, et cetera. With autonomous targeting, we can also use this post-drive time for ChemCam measurements, getting a head start on the next day’s work, or increasing the amount of ChemCam data we can get from this location before driving away again.

Another challenge of targeted science is fine pointing. Some of the most interesting features are the smallest ones – the calcium sulfate veins found in rocks along the rover’s traverse, for example, have revealed important insights into the chemistry of the watery environment in Gale’s past. But these veins are often less than 5 millimeters wide, meaning that a pointing error of only a fraction of a degree can mean missing them with ChemCam from a few meters away. It's hard to pinpoint such small targets. Our stereo model of the rover’s surroundings (made from NavCam images) has resolution limits, and we can only control the motion of the mast so precisely. With AEGIS, we can do autonomous pointing refinement, where the software can correct small errors in the pointing of observations commanded by the team on Earth, ensuring we hit the desired targets on the first try (and saving valuable time on Mars).
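To put rough numbers on the fine-pointing problem, the sketch below computes the angle a 5-millimeter vein subtends from 3 meters away, then shows one simple way a target's pixel offset in a source image could be turned into an azimuth/elevation correction. The function names, the image-axis conventions, and the camera with a 1-degree field of view spread over 1024 pixels are all assumptions for illustration, not AEGIS's actual pointing-refinement code.

```python
import math
from typing import Tuple


def subtended_angle_deg(width_m: float, range_m: float) -> float:
    """Full angle, in degrees, subtended by a feature of width_m seen from range_m."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * range_m)))


def refine_pointing(az_deg: float, el_deg: float,
                    target_px: Tuple[float, float], center_px: Tuple[float, float],
                    ifov_deg_per_px: float) -> Tuple[float, float]:
    """Nudge a commanded (azimuth, elevation) so the image center lands on target_px.

    Hypothetical small-angle model: image x increases with azimuth, and image y
    increases downward (i.e. with decreasing elevation).
    """
    dx = target_px[0] - center_px[0]
    dy = target_px[1] - center_px[1]
    return az_deg + dx * ifov_deg_per_px, el_deg - dy * ifov_deg_per_px


# A 5 mm vein seen from 3 m subtends only about a tenth of a degree.
print(f"{subtended_angle_deg(0.005, 3.0):.3f} deg")  # 0.095 deg

# Hypothetical camera: 1 degree field of view over 1024 pixels.
ifov = 1.0 / 1024
print(refine_pointing(45.0, -10.0, (612, 480), (512, 512), ifov))  # (45.09765625, -9.96875)
```

Since missing the vein means erring by more than half its width, the tolerance here is under 0.05 degrees, which is why small errors in the stereo model or mast motion matter.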

History

AEGIS originally began in the early 2000s as part of a JPL research project that was developing autonomy technology for future rover missions. This effort developed a large, integrated suite of rover technology that enabled a number of autonomous behaviors, including autonomous navigation, onboard commanding and re-planning, and autonomous science. The goal of the autonomous science element was to help scientists collect valuable science data at times when the rover wasn’t in frequent communication with Earth. Several planetary geologists worked closely with the project to help us design software that could autonomously identify scientific features (such as rocks) in visual images and decide which targets would be the most interesting to scientists. Project technology was tested extensively on multiple research rovers in the JPL Mars Yard. Testing of the autonomous science element, which was eventually called AEGIS, typically involved identifying various terrain features (e.g., volcanic rocks or an ancient river bed) during a rover drive and then working with other autonomy software to redirect rover activities towards collecting data on identified science targets.

Based on the results of this project, the Mars Exploration Rover (MER) Mission authorized AEGIS to be uploaded to the Opportunity rover in order to support autonomous selection of targets for remote sensing instruments. In 2009, AEGIS was successfully uploaded to Opportunity, where it has been used to identify and acquire targets of interest for the narrow-field-of-view MER Panoramic Camera (Pancam). MER Pancam is a high-resolution, multi-spectral imager that acquires images at various wavelengths. These images help scientists learn more about the minerals found in Martian rocks and soils. AEGIS was used on MER to acquire Pancam data on rocks with certain properties at times when such data could not otherwise have been collected. It has been used successfully on MER to acquire data on outcrop, cobbles, crater ejecta, and boulders.

ChemCam

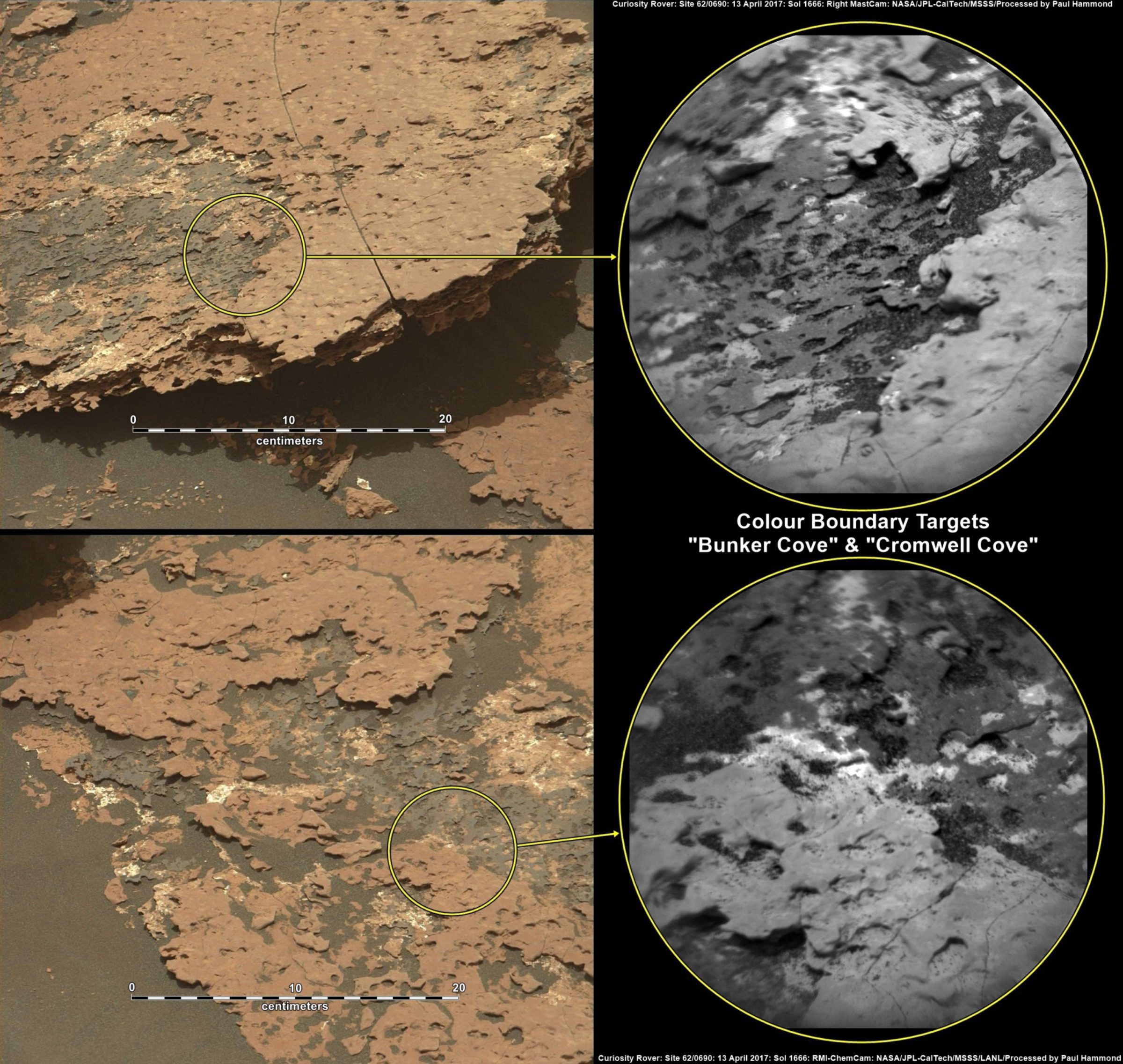

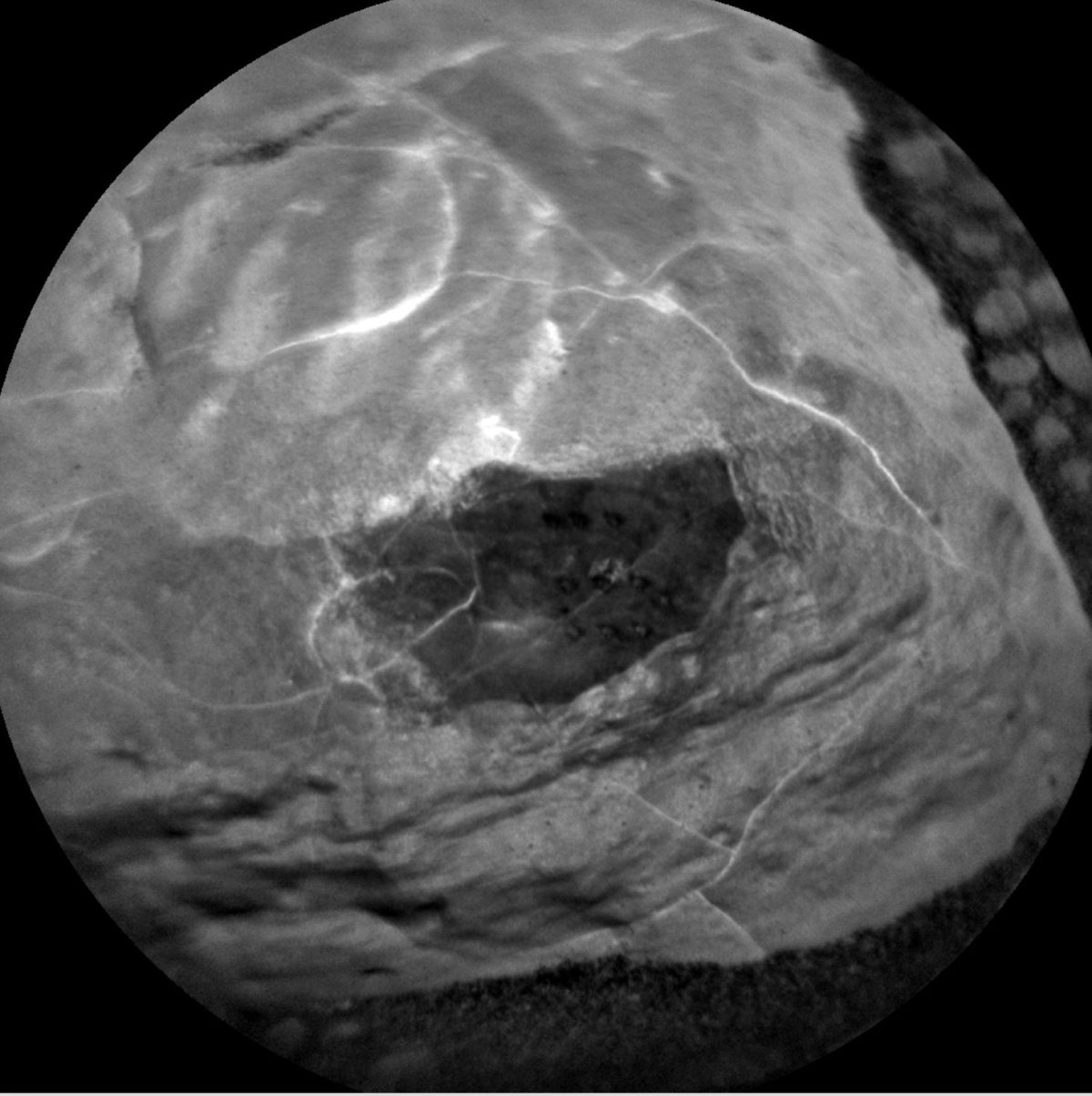

Following the success of AEGIS on MER, new opportunities on the Mars Science Laboratory mission were explored, especially for the ChemCam instrument. ChemCam is a perfect candidate for AEGIS intelligent targeting. The instrument combines a laser-induced breakdown spectrometer (LIBS) system with a context camera called the remote micro-imager (RMI). The RMI has a very narrow field of view, about 1 degree in diameter. The LIBS focuses its powerful laser on rocks as far away as 7 meters from the rover, and captures spectra from the plasma produced. The spot measured is typically less than 1 millimeter across, so targeting is important – you want that spot to fall on something interesting.

A typical ChemCam observation combines a LIBS raster (several LIBS shots on each of a series of points clustered together on a target) with pre- and post-LIBS RMI images, and sometimes intermediate RMIs. The science team uses ChemCam often, usually measuring several targets each time the rover stops to sample the variety of materials we observe. It’s also important to get ChemCam data frequently along the rover’s traverse, so we can get a rich geochemical survey of the terrain over distance and elevation changes.
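The structure of such an observation can be sketched as a small plan builder: raster points laid out symmetrically around the target, each receiving several laser shots, bracketed by pre- and post-LIBS RMI images with optional intermediate RMIs. The `RasterPlan` fields, the 0.4 mrad spacing, and the 30 shots per point below are hypothetical values chosen for illustration, not ChemCam's actual command parameters.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RasterPlan:
    rows: int             # raster points in elevation
    cols: int             # raster points in azimuth
    spacing_mrad: float   # angular step between neighboring points (hypothetical)
    shots_per_point: int  # laser pulses fired at each point (hypothetical)


def raster_offsets(plan: RasterPlan) -> List[Tuple[float, float]]:
    """Angular (azimuth, elevation) offsets in mrad, centered on the target."""
    offsets = []
    for r in range(plan.rows):
        for c in range(plan.cols):
            d_az = (c - (plan.cols - 1) / 2) * plan.spacing_mrad
            d_el = (r - (plan.rows - 1) / 2) * plan.spacing_mrad
            offsets.append((d_az, d_el))
    return offsets


def observation_sequence(plan: RasterPlan, intermediate_rmi_every: int = 0) -> List[str]:
    """Pre-LIBS RMI, the LIBS raster (optionally interleaved with RMIs), post-LIBS RMI."""
    points = raster_offsets(plan)
    steps = ["RMI (pre-LIBS)"]
    for i, (d_az, d_el) in enumerate(points, start=1):
        steps.append(f"LIBS x{plan.shots_per_point} at ({d_az:+.2f}, {d_el:+.2f}) mrad")
        # Optional context image partway through, but not right before the final RMI.
        if intermediate_rmi_every and i % intermediate_rmi_every == 0 and i < len(points):
            steps.append("RMI (intermediate)")
    steps.append("RMI (post-LIBS)")
    return steps


plan = RasterPlan(rows=3, cols=3, spacing_mrad=0.4, shots_per_point=30)
for step in observation_sequence(plan, intermediate_rmi_every=3):
    print(step)
```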

These characteristics mean that precise and specific targeting is valuable for ChemCam, and that the science mission would be enhanced by more frequent observations – and these are things that AEGIS’ autonomous intelligent targeting can deliver.

How it works

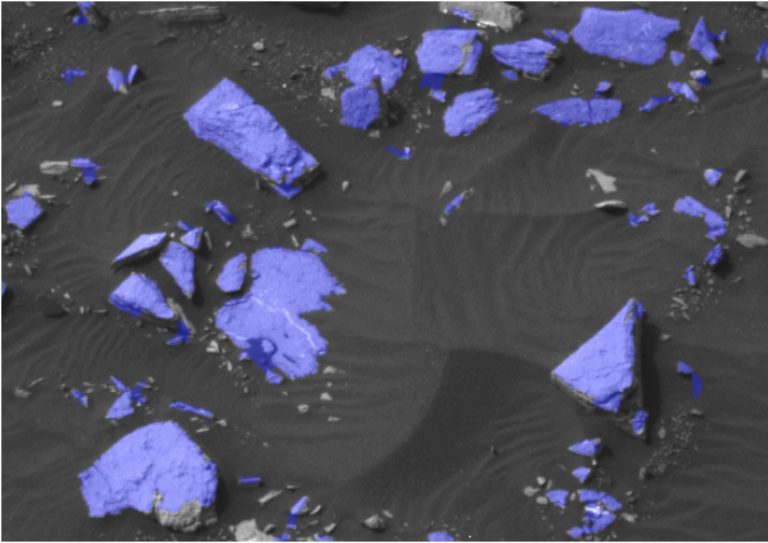

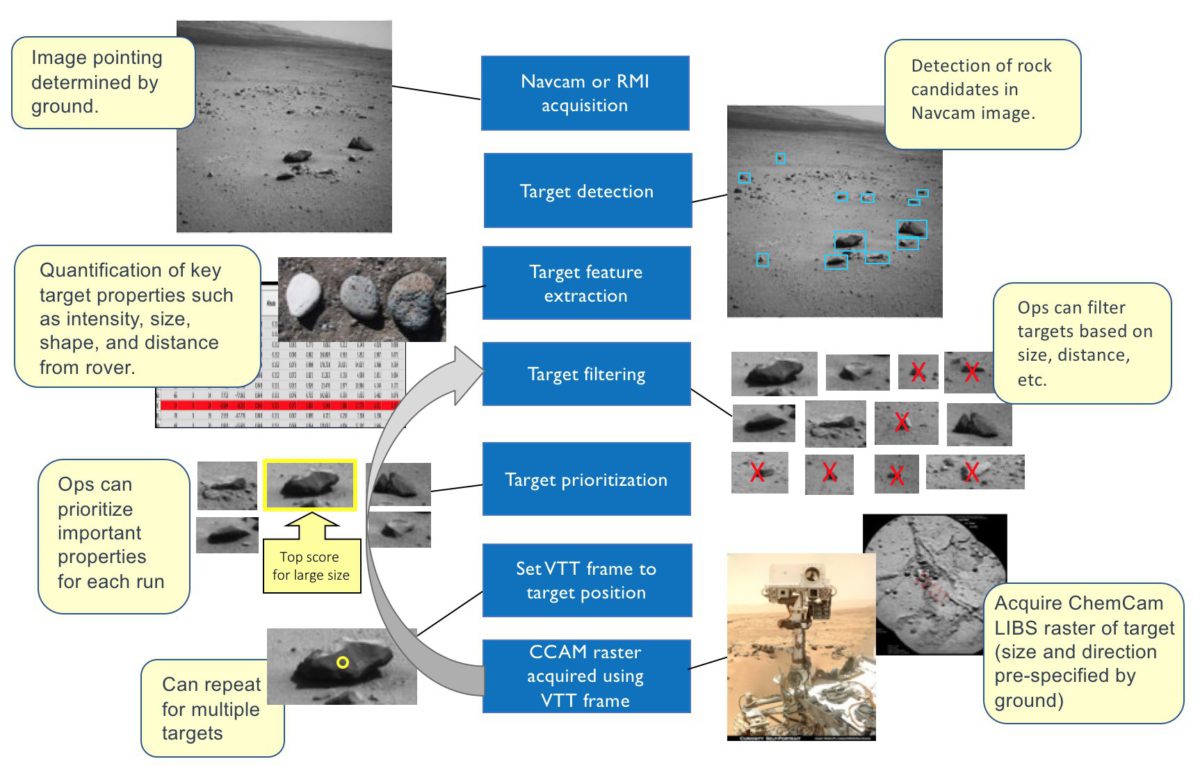

The AEGIS autonomous targeting process begins with taking a ‘source image’ – a photo with an onboard camera (either the NavCam or the RMI). AEGIS’ computer vision algorithms then analyze the image to find suitable targets for follow-up observations. On MER and MSL, AEGIS uses an algorithm called Rockster, which attempts to identify discrete objects by a combination of edge-detection, edge-segment grouping and morphological operations – in short, it finds sharp edges in the images, and attempts to group them into closed contours. Built originally to find float rocks on a sandy or gravelly background, Rockster has proved remarkably versatile at finding a variety of geological target types.
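Rockster itself is more involved than anything that fits here, but the core idea of finding edges and keeping the regions they close off can be illustrated with a toy segmenter. This sketch thresholds a simple forward-difference gradient, applies a one-pixel dilation as a crude stand-in for Rockster's edge-segment grouping and morphological steps, then flood-fills the background from the image border; any non-edge pixels left unreached are treated as enclosed candidate objects. The function names and threshold are hypothetical, and this is not the Rockster algorithm.

```python
from typing import Iterator, List, Set, Tuple

Pixel = Tuple[int, int]


def neighbors4(y: int, x: int, h: int, w: int) -> Iterator[Pixel]:
    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        ny, nx = y + dy, x + dx
        if 0 <= ny < h and 0 <= nx < w:
            yield ny, nx


def edge_mask(img: List[List[int]], threshold: int) -> List[List[bool]]:
    """Mark pixels whose forward-difference gradient (L1 norm) exceeds threshold."""
    h, w = len(img), len(img[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = abs(img[y][x + 1] - img[y][x]) if x + 1 < w else 0
            gy = abs(img[y + 1][x] - img[y][x]) if y + 1 < h else 0
            mask[y][x] = gx + gy > threshold
    return mask


def dilate(mask: List[List[bool]]) -> List[List[bool]]:
    """One-pixel 4-neighbor dilation: a crude morphological step that bridges small gaps."""
    h, w = len(mask), len(mask[0])
    return [[mask[y][x] or any(mask[ny][nx] for ny, nx in neighbors4(y, x, h, w))
             for x in range(w)] for y in range(h)]


def enclosed_regions(img: List[List[int]], threshold: int = 50,
                     close_gaps: bool = True) -> List[Set[Pixel]]:
    """Return 4-connected groups of non-edge pixels that edges cut off from the border."""
    edges = edge_mask(img, threshold)
    if close_gaps:
        edges = dilate(edges)
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]

    def flood(start: List[Pixel]) -> Set[Pixel]:
        # Stack-based fill over non-edge pixels; marks everything it reaches as seen.
        region: Set[Pixel] = set()
        stack = [p for p in start if not edges[p[0]][p[1]] and not seen[p[0]][p[1]]]
        for y, x in stack:
            seen[y][x] = True
        while stack:
            y, x = stack.pop()
            region.add((y, x))
            for ny, nx in neighbors4(y, x, h, w):
                if not edges[ny][nx] and not seen[ny][nx]:
                    seen[ny][nx] = True
                    stack.append((ny, nx))
        return region

    # 1) Everything reachable from the image border is background.
    flood([(y, x) for y in range(h) for x in range(w) if y in (0, h - 1) or x in (0, w - 1)])
    # 2) Remaining non-edge pixels are enclosed by edges: candidate objects.
    regions = []
    for y in range(h):
        for x in range(w):
            if not edges[y][x] and not seen[y][x]:
                regions.append(flood([(y, x)]))
    return regions


# Synthetic 12x12 scene: a bright 7x7 "rock" on a darker background.
scene = [[10] * 12 for _ in range(12)]
for y in range(2, 9):
    for x in range(2, 9):
        scene[y][x] = 200
rocks = enclosed_regions(scene)
print(len(rocks), len(rocks[0]))  # 1 16
```

The detected region is smaller than the rock because the edge pixels and their dilation eat into its border; a real system would need to account for that when measuring size and shape.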

With a list of targets identified in the image, AEGIS inspects the pixels enclosed within each target’s boundary, computing a long list of properties – average brightness, overall shape, distance from the rover (using NavCam stereo), and more. It can then filter out targets based on defined criteria (rocks that are too small, or too far away for ChemCam’s 7-meter range, for example), then rank the remaining targets according to their similarity to an optimum set of property values chosen by the science team (and adjustable for each run). This combination of imaging, target-finding, filtering, and ranking settings, which guides AEGIS to find different types of targets in different environments, is known as a ‘scene profile’. Many such profiles can be defined for different science goals, and they can be adjusted as the rover drives into new terrain with new geological materials.
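A scene profile's filter-then-rank step might look roughly like the following. The article does not say how AEGIS measures similarity to the optimum, so the weighted squared distance used here, along with the `Target` and `SceneProfile` fields and every numeric value, is an assumption for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Target:
    name: str
    area_px: int        # pixels inside the detected boundary
    brightness: float   # mean pixel value inside the boundary (0-255)
    elongation: float   # length/width ratio; 1.0 is roughly round
    range_m: float      # distance from the rover, e.g. from stereo


@dataclass
class SceneProfile:
    min_area_px: int            # reject targets smaller than this
    max_range_m: float          # reject targets beyond instrument range
    optimum: Dict[str, float]   # preferred value of each ranked property
    weight: Dict[str, float]    # relative importance of each property
    scale: Dict[str, float]     # typical spread, to make units comparable


def rank_targets(targets: List[Target], profile: SceneProfile) -> List[Target]:
    """Drop ineligible targets, then sort the rest best-first (lowest cost)."""
    eligible = [t for t in targets
                if t.area_px >= profile.min_area_px and t.range_m <= profile.max_range_m]

    def cost(t: Target) -> float:
        # Hypothetical score: weighted squared distance from the optimum, in scaled units.
        return sum(w * ((getattr(t, key) - profile.optimum[key]) / profile.scale[key]) ** 2
                   for key, w in profile.weight.items())

    return sorted(eligible, key=cost)


# Hypothetical profile favoring bright, elongated (vein-like) features within 7 m.
veins = SceneProfile(
    min_area_px=30, max_range_m=7.0,
    optimum={"brightness": 220.0, "elongation": 6.0},
    weight={"brightness": 1.0, "elongation": 2.0},
    scale={"brightness": 50.0, "elongation": 2.0},
)
candidates = [
    Target("dark-float", 400, 120.0, 1.2, 2.5),
    Target("pebble", 15, 230.0, 4.0, 3.0),          # too small
    Target("bright-nodule", 80, 230.0, 2.5, 4.0),
    Target("distant-block", 900, 200.0, 3.0, 8.1),  # beyond range
    Target("vein-1", 120, 210.0, 5.0, 3.2),
]
print([t.name for t in rank_targets(candidates, veins)])
# ['vein-1', 'bright-nodule', 'dark-float']
```

Because the properties have different units, each term is divided by a per-property scale before weighting; without that, brightness differences (up to 255) would swamp a shape ratio that varies by only a few units.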

Rarely mentioned, but essential to the system’s use, are built-in safety routines to protect both Curiosity and ChemCam. The LIBS laser is powerful enough that shooting at the rover could damage the vehicle, so AEGIS includes checks to ensure it never picks a target on or too near the rover itself. ChemCam’s laser is also focused through a telescope which, if pointed at the sun, could damage the instrument itself, so AEGIS excludes targets too near the sun (a surprising but real risk, even when pointing at rocks on the ground).
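The two safety checks can be expressed as simple geometric tests. In this sketch the rover is an axis-aligned box in a rover-centered frame (x forward, y left, z up, in meters), and a target is rejected if it falls within a margin of that box or if the line of sight from the mast lies within a keep-out cone around the sun. The frame, box dimensions, mast position, margin, and 30-degree keep-out angle are all hypothetical, not AEGIS's flight values.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]

# Hypothetical rover envelope in a rover-centered frame (x forward, y left, z up), meters.
ROVER_BOX_MIN: Vec = (-1.5, -1.4, 0.0)
ROVER_BOX_MAX: Vec = (1.5, 1.4, 2.2)


def unit_from_az_el(az_deg: float, el_deg: float) -> Vec:
    """Unit vector for an azimuth (from +x toward +y) and elevation (above the x-y plane)."""
    az, el = math.radians(az_deg), math.radians(el_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def angle_between_deg(a: Vec, b: Vec) -> float:
    dot = sum(p * q for p, q in zip(a, b))
    norms = math.sqrt(sum(p * p for p in a)) * math.sqrt(sum(q * q for q in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))  # clamp rounding error


def near_rover(target: Vec, margin_m: float) -> bool:
    """True if the target lies inside the rover box grown by margin_m on every side."""
    return all(lo - margin_m <= c <= hi + margin_m
               for c, lo, hi in zip(target, ROVER_BOX_MIN, ROVER_BOX_MAX))


def is_safe_target(target: Vec, mast: Vec, sun_az_deg: float, sun_el_deg: float,
                   sun_keepout_deg: float = 30.0, rover_margin_m: float = 0.5) -> bool:
    if near_rover(target, rover_margin_m):
        return False
    line_of_sight = tuple(t - m for t, m in zip(target, mast))
    sun = unit_from_az_el(sun_az_deg, sun_el_deg)
    return angle_between_deg(line_of_sight, sun) >= sun_keepout_deg


MAST: Vec = (0.0, 0.0, 2.0)  # hypothetical laser aperture position
print(is_safe_target((6.0, 0.0, 0.0), MAST, sun_az_deg=0.0, sun_el_deg=5.0))    # False: ~23 deg from the sun
print(is_safe_target((6.0, 0.0, 0.0), MAST, sun_az_deg=180.0, sun_el_deg=5.0))  # True
print(is_safe_target((1.8, 0.0, 0.5), MAST, sun_az_deg=180.0, sun_el_deg=5.0))  # False: too near the rover
```

The first case shows how a ground target can still be unsafe: with the sun low in the sky straight ahead, a line of sight angled about 18 degrees down at a rock 6 meters away sits only about 23 degrees from the sun.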

Delivering AEGIS to MSL

Following work to adapt AEGIS to MSL and to ChemCam, including processing RMI images and targeting the LIBS laser, the system was extensively tested at JPL on the Vehicle System Testbed (VSTB) – the Curiosity rover’s well-known terrestrial twin. The tests demonstrated the software worked correctly, and could run in parallel with other typical background operations on the rover. Importantly, it also confirmed the safety checks for laser and sun safety. After these tests, AEGIS was uploaded to MSL, and installed in the rover’s flight software in October 2015.

An extensive suite of onboard checkouts confirmed its safe and accurate behavior over the following months. In these experiments, the software ran and processed images under various conditions, selecting targets and gradually being given more authority to act on its decisions, culminating in being allowed to select targets, point ChemCam at them, and fire the laser in a series of pulses, just as in standard scientific measurements. The checkouts were spread over several months, partly to allow the AEGIS team to assess performance after each round, but more importantly to fit in between activities of the ongoing MSL science mission. Time on Mars is valuable, and the period after the AEGIS upload included the first phase of the Bagnold Dunes Campaign in January 2016, whose activities had been carefully planned by mission scientists. Checkouts were completed by February 2016, and after some training for the mission scientists, the rover ops team, and the ChemCam ops team, AEGIS was released for use in routine science operations in May 2016.

Results from MSL

Since then, AEGIS has rapidly become a regular part of the team’s exploration with Curiosity. The pointing-refinement mode is used when the ChemCam team believes a feature is small enough that there’s some risk of missing it on the first try – a little extra time for AEGIS to acquire and analyze the source image is the price of ‘pointing insurance’ that can save having to retarget the feature the next sol – or missing it altogether, if the rover drives away. In every run so far, AEGIS has improved the pointing commanded from Earth, and in some cases saved ChemCam from missing the target.

The autonomous targeting mode has been especially heavily used – more often than not, when Curiosity drives, it does AEGIS-guided ChemCam soon after. Running after most drives means that AEGIS has acquired ChemCam observations on over 130 targets (as of March 2018), and significantly increased the data rate from ChemCam. These are all targets which wouldn’t have been observed without onboard autonomy – they used post-drive time when targeted observations aren’t possible, because images of the new location haven’t yet been seen by the team on Earth.

The team has also learned to use the system to help guide, or complete, their exploration of an area. ChemCam data on the AEGIS targets is sometimes delivered to Earth in time to guide target selection for the next sol. This has often led the team to follow up on interesting chemistry seen in an AEGIS target, and has guided interpretation of features seen in images of the rover’s surroundings. In addition, when time is tight, the previous sol’s post-drive AEGIS targets have often helped complete the geochemical survey of a site – if there are three distinct geological units in the rover’s workspace, but AEGIS has already measured one of them, the team can focus their efforts on the other two. On the data analysis side, certain scientific studies, which rely on statistics of the variability of materials or the closer spacing of observations along the rover’s traverse, have benefited significantly from having so many more ChemCam targets.

The future

The success of AEGIS on MSL has led to its inclusion in NASA’s next Mars rover mission, to launch in 2020. That mission will have AEGIS available from the start of its surface operations, once again for targeting mast-mounted instruments. These will include SuperCam, the upgraded successor to ChemCam, which adds Raman and infrared reflectance spectrometers to its LIBS and RMI.

The AEGIS team envisions other applications too. There are quite a number of places in the solar system where a robotic explorer can’t always wait around for Earthly operators to choose targets. Smarter robots with autonomous science capabilities will likely play an important role in exploring more challenging destinations, and mission designers and operators will learn to use these systems to share responsibility for decision-making with their distant robotic systems.
